Manual Testing vs Automated Testing: Pros, Cons, and Use Cases

Automated software testing sounds way better than manual, right?

Well, not exactly.

Despite the speed, scale, and cost advantages of automation testing, there are still cases where the manual assessment of software quality and performance is beneficial.

Read on to learn which scenarios best suit manual testing and which ones are ideal for automated testing.

This article also explains what manual and automated testing are and how they differ.

Without further ado, let’s jump right in!

What is manual testing?

Manual testing is a software testing method that involves Quality Assurance (QA) analysts individually executing tests to identify bugs and feature issues before an application is released. In this process, human testers validate key features by developing and executing test cases and generating error reports without automation tools.

What is automated testing?

Automated testing, or automation testing, is a software testing approach that uses tools and scripts to streamline the testing process. Though developers need to write the test scripts manually at first, those scripts can then run on their own without human intervention. As a result, automated testing lets developers run more test cases on more devices or platforms than manual testing allows.
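Here is a minimal sketch of an automated test using Python’s built-in `unittest` module. The `apply_discount` function is a hypothetical stand-in for real app code. The point is that once a developer writes the test cases, they can be re-run unattended as often as needed.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical app function under test: returns the discounted price."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    """Written once by a developer, then re-run automatically on every change."""

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_no_discount(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 120)
```

If this were saved as a file named, say, `test_discount.py` (a hypothetical name), `python -m unittest test_discount` would run all three checks in one step.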

But the difference between manual and automated testing goes beyond scale. The next section summarizes their distinctions.

Manual testing vs automated testing: Essential distinctions for business considerations

Our product strategists and developers of mobile and web apps collaborate closely to ensure our clients get the most return on their hard-earned investment.

That’s why, when we test mobile apps and other platforms, we weigh the following key differences to make the best use of our clients’ time and financial resources:

| Criteria | Automated Testing | Manual Testing |
|---|---|---|
| Initial setup speed | Slower (due mainly to initial scripting) | Faster |
| Overall speed | Faster | Slower |
| Initial investment | More expensive | Less expensive |
| Long-term cost | Less expensive | More expensive |
| Deadlines | Less chance of missing deadlines | Higher risk of missing deadlines |
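The two cost rows of the comparison imply a break-even point. Automation costs more up front but less per test cycle, so it pays off once enough cycles have run. The sketch below computes that point. All cost figures in it are made up purely for illustration and are not benchmarks.

```python
import math
from typing import Optional


def cumulative_cost(setup: float, per_cycle: float, cycles: int) -> float:
    """Total spend after a given number of test cycles."""
    return setup + per_cycle * cycles


def break_even_cycle(auto_setup: float, auto_per_cycle: float,
                     manual_setup: float, manual_per_cycle: float) -> Optional[int]:
    """First test cycle at which automation's cumulative cost is <= manual's.

    Returns None if automation never catches up (its per-cycle cost isn't lower).
    """
    saving_per_cycle = manual_per_cycle - auto_per_cycle
    extra_upfront = auto_setup - manual_setup
    if extra_upfront <= 0:
        return 0  # automation is no more expensive even before the first cycle
    if saving_per_cycle <= 0:
        return None  # automation never recovers its extra setup cost
    return math.ceil(extra_upfront / saving_per_cycle)


# Hypothetical figures: automation costs 12,000 to set up and 300 per cycle
# (maintenance, infrastructure); manual costs 1,000 to set up and 1,500 per cycle.
print(break_even_cycle(12_000, 300, 1_000, 1_500))  # 10
```

With these assumed numbers, a project expecting only a handful of test cycles would be cheaper to test manually. A long-lived app with frequent releases would pass the break-even point quickly.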

The table above shows, in simple terms, the business implications of manual testing vs automated testing.

But from a technical standpoint, the differences between manual and automated testing are more complex. They both have their strengths, weaknesses, and ideal use cases. I will cover all these in the succeeding sections.

Let’s start with manual testing.

When to use manual testing?

Manual testing is particularly effective for evaluating functionalities, user interfaces, website and application behaviors, user acceptance, and overall user experience.

Other scenarios where manual testing excels include:

- Cases where flexibility is crucial, as QA testers can quickly assess and provide immediate feedback

- Short-term projects where the effort and cost of setting up automated tests are not justified

- Usability testing, where testers use a sample group of people to assess how easy the software is to use and how effective it is in fulfilling tasks

Source: Nielsen Norman Group on YouTube

Pros of manual testing

- Versatility. Manual test cases can be applied across various scenarios, enhancing test coverage.

- Swift feedback. It offers quick and precise feedback, aiding in rapid issue identification and resolution.

- Adaptability. Manual testing easily adjusts to changes in user interfaces, accommodating evolving software needs.

Cons of manual testing

- Incomplete defect detection. Manual testing cannot guarantee 100% test coverage, potentially missing some defects.

- Time consumption. Comprehensive manual test cases take considerable time to cover all functionalities.

- Non-reusability. Manual test runs cannot be recorded and replayed the way automated scripts can, which limits efficiency and scalability.

- Reliability issues. Tests designed and executed by humans are more prone to errors and inconsistency.

Now that you are better acquainted with the strengths, weaknesses, and ideal use cases for manual testing, it’s time to delve deeper into automated testing.

When to use automated testing?

Automated testing excels in scenarios requiring repetitive tasks, such as regular regression tests that ensure new code doesn’t break existing functionality. Automation tools are also beneficial when human resources are limited, helping teams complete tests on time even with fewer dedicated testers. Automated testing is also best suited for load testing, in which developers assess how the software handles requests from many users at once. Check out the video below to see an example of an automated testing tool and how it makes load testing of websites more efficient.

Source: LoadView Testing on YouTube
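Here is the basic shape of a load test using only Python’s standard library. Many simulated users hit an endpoint concurrently while their response times are recorded. `handle_request` is a hypothetical placeholder that just sleeps. A real load test, such as one run with a dedicated tool like the one in the video, would send network requests to a deployed server instead.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Tuple


def handle_request(user_id: int) -> int:
    """Hypothetical stand-in for the endpoint under test (e.g. a product page)."""
    time.sleep(0.01)  # simulate I/O latency
    return 200  # HTTP-style status code


def timed_call(user_id: int) -> Tuple[int, float]:
    """Call the endpoint once and measure how long it took."""
    start = time.perf_counter()
    status = handle_request(user_id)
    return status, time.perf_counter() - start


def run_load_test(num_users: int, concurrency: int) -> Dict[str, float]:
    """Fire num_users requests with at most `concurrency` in flight at once."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(num_users)))
    latencies = sorted(latency for _, latency in results)
    errors = sum(1 for status, _ in results if status != 200)
    p95 = latencies[int(0.95 * (len(latencies) - 1))]  # approximate 95th percentile
    return {
        "requests": num_users,
        "errors": errors,
        "mean_s": statistics.mean(latencies),
        "p95_s": p95,
    }


report = run_load_test(num_users=200, concurrency=50)
print(report)
```

In practice, a team would compare `errors` and `p95_s` against agreed thresholds and fail the run automatically when they are exceeded.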

Pros of automated testing

- Test process recording. Automation allows tests to be recorded, facilitating reuse and efficiency.

- Enhanced bug detection. The use of automation in testing tends to uncover more defects within the software compared to manual testing.

- Elimination of fatigue factor. Since automated tests are mostly software-driven, testing quality remains constant regardless of how tired testers are.

- Faster regression testing. Automation tools are ideal for repetitive regression testing, significantly reducing time.

- Expanded test coverage. Automated testing makes it practical to run far more test cases, across more devices and configurations, than a manual team could cover.

Cons of automated testing

- Susceptibility to errors. Automated tests are only as reliable as their scripts. Flawed or outdated scripts can let software defects or suboptimal code slip through.

- Need for skilled staff. Conducting automated tests requires trained personnel proficient in certain programming languages and testing methodologies.

- Limited capacity to test visual elements. Automation testing tools struggle to equal human testers in assessing visual aspects of software or apps, such as color, font size, and button dimensions.

Now that you’ve seen the pros, cons, and ideal applications of manual and automated testing, it’s easy to think it’s “one or the other.”

But as you will find out in the succeeding parts of this article, you don’t always have to pick sides in the manual testing vs automated testing faceoff. For certain software development projects, it is actually necessary to leverage the best of both worlds.

When to use both manual and automated testing?

Combining manual and automated testing delivers the best results in the following scenarios:

- Large-scale app development projects

- Testing of complex apps

- Continuous Integration / Continuous Delivery (CI/CD) pipelines

Large-scale app development projects

One of the simplest examples of a large-scale project is the testing and development of an e-commerce app.

This type of application can be challenging to test, given that it can hold thousands to millions of products in its database. Ensuring that the buttons and features work well for each product can be time-consuming when done manually. Automated testing tools excel at this routine and high-volume task.
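The repetitive, high-volume part of this job is easy to script. In this sketch, the `Product` and `Cart` classes and the synthetic 100,000-item catalog are all hypothetical. The loop exercises the "Add to cart" behavior for every product and collects the SKUs that misbehave, which is a check no manual tester could repeat on every release.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Product:
    sku: str
    in_stock: bool


@dataclass
class Cart:
    items: Dict[str, int] = field(default_factory=dict)

    def add(self, product: Product, qty: int = 1) -> None:
        """Hypothetical 'Add to cart' behavior under test."""
        if not product.in_stock:
            raise ValueError(f"{product.sku} is out of stock")
        self.items[product.sku] = self.items.get(product.sku, 0) + qty


def check_add_to_cart(catalog: List[Product]) -> List[str]:
    """Exercise 'Add to cart' for every product; return SKUs that misbehave."""
    failures = []
    for product in catalog:
        cart = Cart()
        try:
            cart.add(product)
            # Success is only correct for in-stock items, with quantity 1.
            ok = product.in_stock and cart.items.get(product.sku) == 1
        except ValueError:
            # Out-of-stock items are expected to be rejected.
            ok = not product.in_stock
        if not ok:
            failures.append(product.sku)
    return failures


# Synthetic catalog standing in for a real product database.
catalog = [Product(f"SKU-{i:06d}", in_stock=(i % 7 != 0)) for i in range(100_000)]
print(len(check_add_to_cart(catalog)))  # 0
```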

On the other hand, manual tools and methods are helpful for usability testing. For instance, testers might try to determine how the e-commerce app will function if a user adds too many products to the app’s cart. Humans (and manual testing) are more adept at assessing the app’s performance in such an unconventional scenario.

Speaking of e-commerce apps, they are one of our specialties. In fact, Australian e-commerce giant MyDeal worked with us to develop a mobile app that became instrumental in increasing the company’s value to around $160 million. To learn more about this success story, check out the MyDeal case study.

Testing of complex apps

Imagine an app that provides basic banking services on top of stock investment options. The complexity of this kind of application needs the complementary powers of manual and automated testing.

Manual testing is especially well suited to assessing the app’s behavior in edge cases like sudden and frequent fluctuations in stock prices. Will the app show the right account balance in such a case? Manual testing methods and techniques can help answer that question.

Automated testing is more appropriate for repetitive and high-scale tasks. Testing the investment app’s performance and behavior when dozens or hundreds of users log in and transfer funds from different devices is an ideal scenario for automated testing.

Source: Shares.io

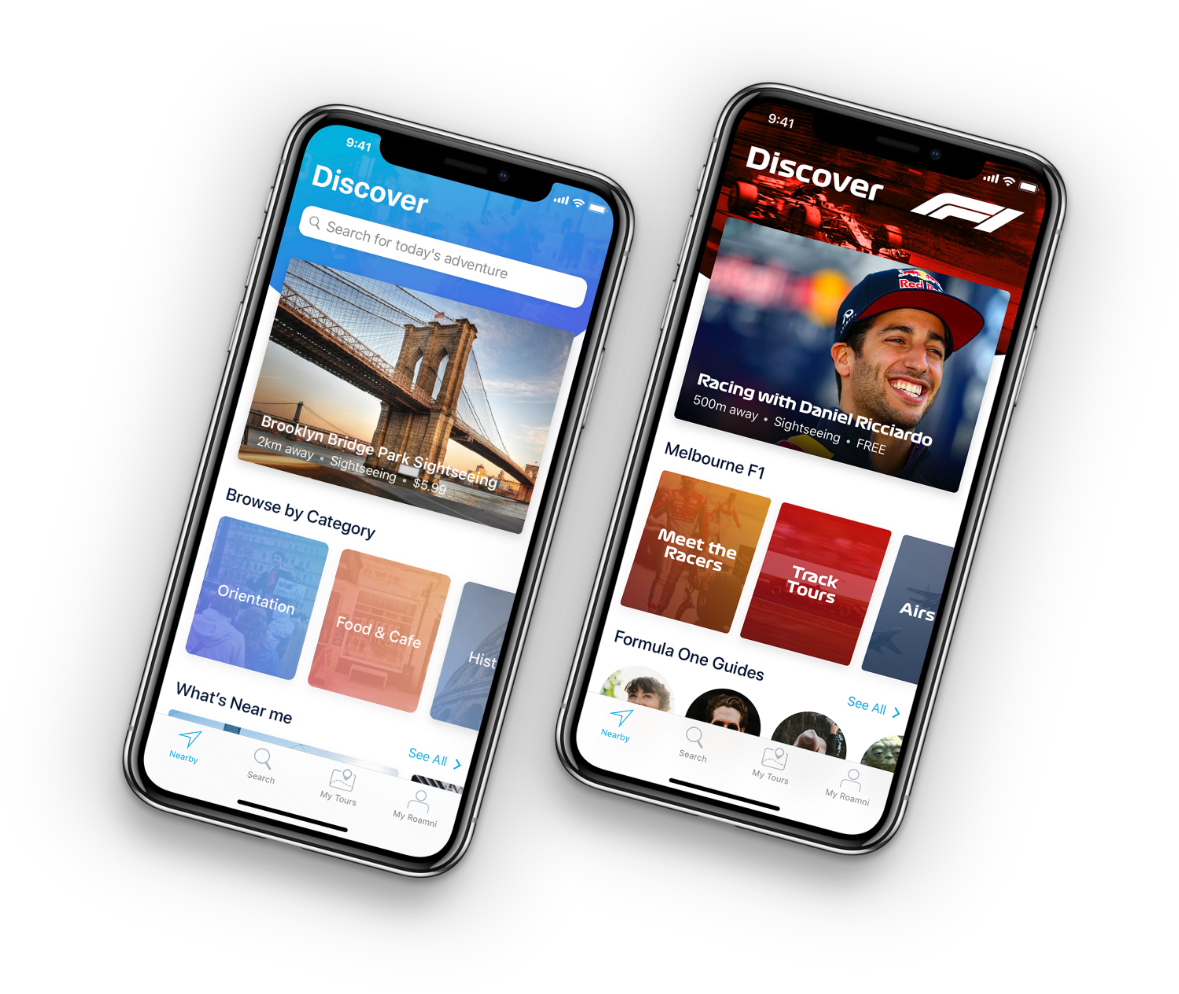
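For the investment app example, the automated side might look like the sketch below. Hundreds of simulated users transfer funds concurrently, and the test then checks invariants that must always hold: no money is created or destroyed, and no balance goes negative. The `Bank` class is a hypothetical in-memory stand-in for the app’s real transfer service.

```python
import random
import threading
from concurrent.futures import ThreadPoolExecutor


class Bank:
    """Hypothetical in-memory ledger standing in for the app's transfer service."""

    def __init__(self, num_accounts: int, opening_balance: int):
        self.balances = [opening_balance] * num_accounts
        self._lock = threading.Lock()

    def transfer(self, src: int, dst: int, amount: int) -> bool:
        with self._lock:  # without this lock, concurrent transfers could corrupt balances
            if self.balances[src] < amount:
                return False  # insufficient funds: reject the transfer
            self.balances[src] -= amount
            self.balances[dst] += amount
            return True


def simulate_users(bank: Bank, num_users: int, transfers_per_user: int, seed: int = 42) -> None:
    """Each simulated user performs random transfers from a worker thread."""
    def user_session(user_id: int) -> None:
        rng = random.Random(seed + user_id)  # seeded per user
        n = len(bank.balances)
        for _ in range(transfers_per_user):
            src, dst = rng.randrange(n), rng.randrange(n)
            bank.transfer(src, dst, rng.randint(1, 50))

    with ThreadPoolExecutor(max_workers=32) as pool:
        list(pool.map(user_session, range(num_users)))  # list() surfaces any exceptions


bank = Bank(num_accounts=20, opening_balance=1_000)
simulate_users(bank, num_users=200, transfers_per_user=50)

# Invariants an automated test can check after every run:
assert sum(bank.balances) == 20 * 1_000, "money was created or destroyed"
assert min(bank.balances) >= 0, "an account went negative"
print("ledger consistent")
```

The human-judgment questions, such as whether the displayed balance makes sense during a price spike, remain with manual testers.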

Continuous Integration / Continuous Delivery (CI/CD) pipelines

How do you ensure your app or software still functions well despite multiple changes in code across time and by multiple teams?

That’s where Continuous Integration / Continuous Delivery (CI/CD) pipelines come in.

CI/CD simplifies the process of coding, testing, and deploying applications by providing a unified repository for all work and automated tools. Through CI/CD pipelines, development teams can consistently integrate and test code updates, ensuring optimal software functionality regardless of changes.

Source: Datascientest

As you can see, automation tools are crucial in CI/CD. But when testing software, these tools need to work alongside manual testing to ensure applications perform at their best and remain usable across multiple iterations.

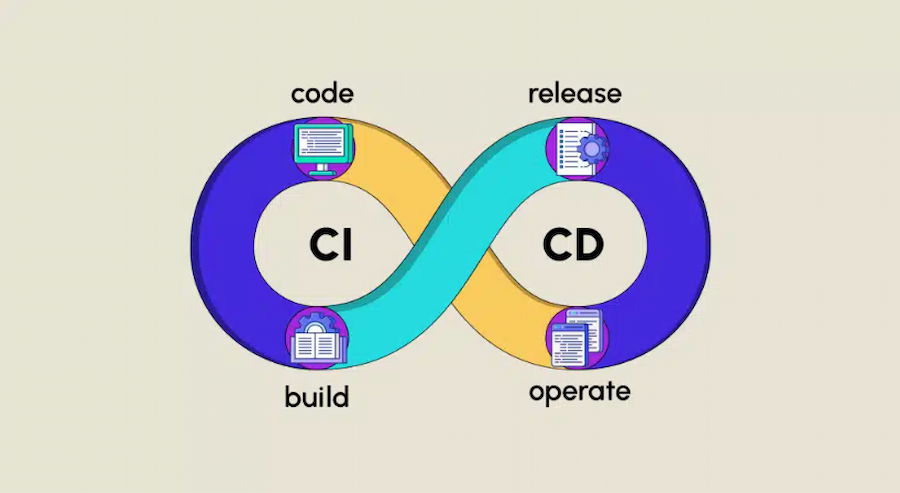
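At its core, a CI/CD pipeline is an ordered series of gated stages. The sketch below models that idea with hypothetical stage functions in Python. It includes a manual QA sign-off gate to show how human testing can hold back a release that has already passed the automated checks. Real pipelines are usually defined in a CI service’s own configuration format rather than in a script like this.

```python
from typing import Callable, List, Tuple

# Each stage is a (name, action) pair; an action returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def build() -> bool:
    return True  # placeholder: compile and package the app


def run_automated_tests() -> bool:
    return True  # placeholder: unit, integration, and regression suites


def deploy_to_staging() -> bool:
    return True  # placeholder: push the build to a test environment


def manual_qa_signoff() -> bool:
    # Placeholder for a human gate. In practice the pipeline would pause here
    # until a tester approves after exploratory and usability checks on staging;
    # this sketch simulates a tester who has not approved yet.
    return False


def deploy_to_production() -> bool:
    return True  # placeholder: release to users


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stopping at the first failure (fail-fast gating)."""
    completed: List[str] = []
    for name, action in stages:
        if not action():
            print(f"{name} did not pass; skipping the remaining stages")
            break
        completed.append(name)
    return completed


result = run_pipeline([
    ("build", build),
    ("automated tests", run_automated_tests),
    ("deploy to staging", deploy_to_staging),
    ("manual QA sign-off", manual_qa_signoff),
    ("deploy to production", deploy_to_production),
])
print(result)  # ['build', 'automated tests', 'deploy to staging']
```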

One of the best examples to illustrate how manual and automated testing synergize in CI/CD pipelines is the development of software for self-driving cars.

If a self-driving car were a human being, the software that runs it would be its mind. Its senses would be the cameras and other sensors that enable the vehicle to monitor and track environmental elements like roads, pedestrians, and obstacles.

Source: Facts&Reasons

Given the ever-changing nature of places and driving conditions, developers need to regularly update the autonomous vehicle’s software and re-test it to ensure it can correctly and safely control the car to take passengers to the right destination.

Since software for self-driving cars needs regular updates and consequent testing, its developers can do their work more efficiently by using AI testing tools in the software’s CI/CD pipeline. These tools enhance automation by intelligently identifying edge cases and optimizing regression cycles. Without automation, it will take longer for developers to keep track of changes in the software’s code and make the changes usable for testing.

In particular, these pipeline tasks are most suited to automated testing:

- Handling routine tasks. A self-driving car’s software needs to constantly process the data it gathers from cameras and other vehicle sensors. Testing the functioning of this routine task is best left to automated systems to enable human testers to focus on more complex issues.

- Simultaneous testing of multiple systems. Automated tools can cover the vast number of tests needed for the interconnected systems in a self-driving car, ensuring thorough coverage. For instance, computerized tools can test how software processes sensor data while assessing how the code controls the car’s power systems and wheels.
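Routine checks like the first one above lend themselves to randomized (property-based) testing. The test generates many synthetic sensor frames and asserts invariants on every result. In this sketch, `fuse_distance` is a hypothetical simplification of a sensor-fusion step that takes the median of redundant distance readings. It is not how any real autonomous-driving stack works.

```python
import random
import statistics
from typing import List, Optional


def fuse_distance(readings: List[Optional[float]]) -> Optional[float]:
    """Hypothetical sensor-fusion step: median of valid obstacle-distance readings (m).

    None marks a dropped reading; negative values are treated as sensor faults.
    """
    valid = [r for r in readings if r is not None and r >= 0]
    return statistics.median(valid) if valid else None


def random_frame(rng: random.Random, sensors: int = 4) -> List[Optional[float]]:
    """Synthetic frame: some readings dropped or faulty, the rest 0-120 m."""
    frame: List[Optional[float]] = []
    for _ in range(sensors):
        roll = rng.random()
        if roll < 0.10:
            frame.append(None)   # dropped reading
        elif roll < 0.15:
            frame.append(-1.0)   # faulty reading
        else:
            frame.append(rng.uniform(0.0, 120.0))
    return frame


def check_fusion(frames: int = 50_000, seed: int = 7) -> int:
    """Replay many synthetic frames, assert invariants, and return frames checked."""
    rng = random.Random(seed)
    for _ in range(frames):
        frame = random_frame(rng)
        fused = fuse_distance(frame)
        valid = [r for r in frame if r is not None and r >= 0]
        if not valid:
            assert fused is None, frame
        else:
            # The fused value must never fall outside what the sensors reported.
            assert min(valid) <= fused <= max(valid), (frame, fused)
    return frames


print(check_fusion())  # 50000
```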

But despite the superiority of automated testing systems in some respects, there is still room for manual testing in an autonomous vehicle’s software development pipeline, particularly in tasks like:

- Accounting for edge cases. Automated tests may miss unexpected scenarios, such as sudden weather changes or unconventional pedestrian actions. Manual testers can design tests for these situations, identifying potential weaknesses in software that may cause injury or death.

- Assessing user experience. Automation testing tools can’t fully account for the human experience. Manual testers can evaluate more nuanced aspects of autonomous vehicle operations, like how the software’s control of car speed and direction ensures passenger comfort. Testers may also identify points of confusion in the car software’s user interface, helping reduce inconvenience and accidents.

Test new avenues of success

There is no straightforward winner in the manual testing vs automated testing matchup.

Despite the performance, speed, and scaling advantages of automated testing systems, manual testing is still relevant because some aspects of the user experience remain beyond the reach of automation. This matters because user experience is as important as technical performance in software development.

Ultimately, both manual and automated testing methodologies complement each other and are equally relevant to producing robust, high-performing, and user-friendly software systems and applications.

But do you know what else produces top-notch software?

A user-centered app design and development process is an ideal partner for top-notch software testing.

Without design prototypes, you are less likely to prevail in a market with at least 9 million apps competing for users’ attention. Integrating testing with design and development maximizes the chance that people will choose your software over a myriad of other options.

Designs also excel at attracting funding for app testing and development projects. Tour tech startup Roamni raised around $3 million in funding, thanks mostly to a design prototype.

The next time you embark on a software development project, find the right kind of app developers and designers who can also help you navigate the nuances of manual testing vs automated testing.

To jumpstart your search for the right software development partner, try booking a free consultation with us. Test how our alternative avenues for app business success match your dreams and goals.

People also ask about manual and automated testing

Whether you’re weighing costs, team resources, or release speed, here are the most common questions app founders and QA teams ask about choosing the right testing approach.

1. What’s the real ROI of automated testing vs. manual testing?

The difference is dramatic. According to Virtuoso QA’s ROI analysis, manual testing delivers a negative ROI of -0.5% at scale, while traditional automation (Selenium-based) returns around 56% ROI. AI-native test automation can deliver over 1,160% ROI by eliminating maintenance overhead. Note: figures are vendor-reported.
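ROI figures like these are usually based on the standard formula: ROI = (benefit - cost) / cost × 100. The sketch below applies that formula to made-up numbers. It does not reproduce Virtuoso QA’s model, because the inputs behind that model aren’t given here.

```python
def roi_percent(total_benefit: float, total_cost: float) -> float:
    """Standard ROI: net gain relative to cost, expressed as a percentage."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) * 100 / total_cost


# Made-up example: an automation effort costing 50,000 (tools, scripting,
# maintenance) that saves 80,000 worth of manual testing effort.
print(roi_percent(80_000, 50_000))  # 60.0

# If the savings fall short of the cost, ROI goes negative.
print(roi_percent(45_000, 50_000))  # -10.0
```

Ongoing costs like script maintenance belong in `total_cost`. This is why maintenance overhead has such a large effect on the final figure.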

2. Can automated testing fully replace manual testing?

No. Automated testing cannot fully replace manual testing. Automation excels at repetitive regression tests and large-scale load testing, but it cannot evaluate visual elements, user experience nuances, or edge-case scenarios the way human testers can. A combination of both approaches delivers the most reliable results.

3. What types of testing should always be done manually?

Four testing types still require human testers: usability testing (assessing real user interactions), exploratory testing (finding bugs without predefined scripts), ad hoc testing for edge cases like sudden input changes, and user acceptance testing (UAT), where stakeholders validate real-world functionality. These rely on human intuition that automation cannot replicate.

4. How much does test automation save compared to manual testing?

Automation delivers significant savings at scale.

Virtuoso QA’s modelling shows an 86% cost reduction compared to manual testing and a 63% reduction compared to traditional automation frameworks for a mid-market SaaS company. The biggest savings come from eliminating test maintenance overhead; teams using AI-native tools spend 95% less time on maintenance. Note that figures are vendor-reported.

5. When should a startup invest in automated testing?

Invest in automation when your app requires frequent regression testing, has a growing codebase, or runs in a CI/CD pipeline. Traditional automation frameworks carry 60% maintenance overhead, so weigh that cost carefully. For short-term or MVP-stage projects, manual testing is more practical because the setup cost of automation isn’t justified yet.

Jesus Carmelo Arguelles, aka Mel, is a Content Marketing Specialist by profession. Though he holds a bachelor’s degree in business administration, he also took courses in fields like computer troubleshooting and data analytics. He also has a wealth of experience in content writing, marketing, education, and customer support.